博文

【Church - 钟摆摆得太远(4):明斯基论】

精选

精选

|||

【立委按】这一节Church回顾的是明斯基对于神经网络及其线性分隔器的严厉批评。这一阵子倒腾这个系列实在耗时太多(尽管有 Google Translate 平台的帮助),有点儿“不务正业”。虽然说对于学界教授,这样的“进口科普”也算是严肃的份内业务,但对于工业界的工匠(或工头)而言,却不宜过分投入。熬了几夜,案下也积攒了一堆日常工作,感觉还是收手为妙。为使系列完整,把有关章节原文附上。

【原立委按】【NLP主流的傲慢与偏见】系列刚写了三篇。中国NLP(Natural Language Processing)前辈董振东老师来函推荐两篇来自主流的反思文章。董老师说,主流中有识之士对深陷成见之中的NLP一边倒的状况,有相当忧虑和反思。

Church (2011) 对NLP的回顾和反思的文章【钟摆摆得太远】 (A Pendulum Swung Too Far)是一篇杰作,值得反复研读。文章在语言研究中经验主义和理性主义此消彼长循环往复的大背景下,考察NLP最近20年的历程以及今后20年的趋势。它的主旨是,我们这一代NLP学者赶上了经验主义的黄金时代(1990迄今),把唾手可得的果子统统用统计摘下来了,留给下一代NLP学人的,都是高高在上的果实。20多年统计一边倒的趋势使得我们的NLP教育失之偏颇,应该怎样矫正才能为下一代NLP学人做好创新的准备,结合理性主义,把NLP推向深入?忧思溢于言表。原文很长,现摘要译介如下。

【Church - 钟摆摆得太远(4)】

四 明斯基对神经网络的批评

明斯基和帕佩特表明,感知机(更广泛地说是线性分离机)无法学会那些不可线性分离的功能,如异或(XOR) 和连通性(connectedness)。在二维空间里,如果一条直线可以将标记为正和负的点分离开,则该散点图即线性可分。推广到n 维空间,当有n -1 维超平面能将标记为正和负的点分离开时,这些点便是线性可分的。

判别类任务

对感知机的反对涉及许多流行的机器学习方法,包括线性回归(linear regression)、logistic 回归(logistic regression)、支持向量机(SVMs) 和朴素贝叶斯(Naive Bayes)。这种反对意见对信息检索的流行技术,例如向量空间模型 (vector space model) 和概率检索(probabilistic retrieval) 以及用于模式匹配任务的其他类似方法也都适用,这些任务包括:

词义消歧(WSD):区分作为“河流”的bank 与作为“银行”的bank。

作者鉴定:区分《联邦党人文集》哪些是汉密尔顿(Hamilton)写的,哪些是麦迪逊(Madison)写的。

信息检索(IR) :区分与查询词相关和不相关的文档。

情感分析:区分评论是正面的还是负面的。

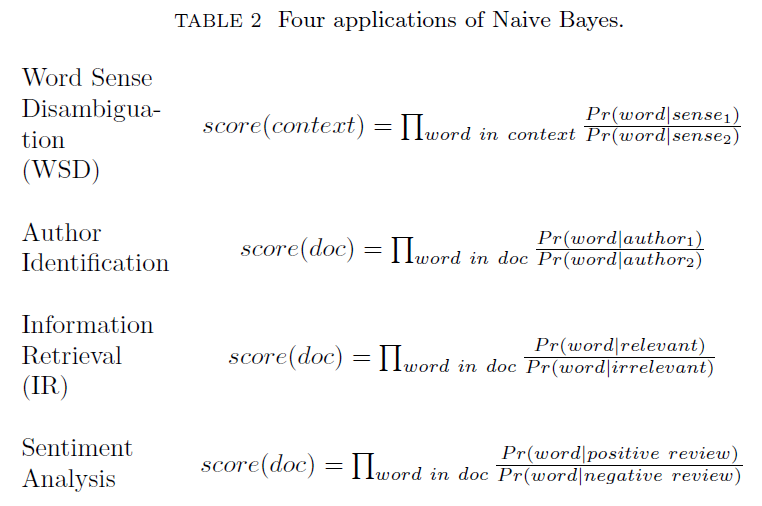

机器学习方法,比如朴素贝叶斯,经常被用来解决这些问题。例如,莫斯特勒(Mosteller) 和华莱士(Wallace) 的鉴定工作始于《联邦党人文集》,共计85篇文章,其作者是麦迪逊、汉密尔顿和杰伊(Jay)。其中多数文章的作者是明确的,但有十几篇仍具争议。于是可以把多数文章作为训练集建立一个模型,用来对有争议的文件做判别。在训练时,莫斯特勒和华莱士估算词汇表中的每个词的似然比:Pr(word|Madison)/Pr(word|Hamilton)。对有争议的文章通过文中每个词的似然比的乘积打分。其他任务也使用几乎相同的数学公式,如表2 所示。近来,诸如logistic 回归等判别式学习方法正逐步取代如朴素贝叶斯等生成式学习方法。但对感知机的反对意见同样适用于这两类学习方法的多种变体。

停用词表、词权重和学习排名

虽然表2 中4 个任务的数学公式类似,但在停用词表(stoplist)上仍有重要的区别。信息检索最感兴趣的是实词,因此,常见的做法是使用一个停用词表去忽略功能词,如“the”。与此相对照,作者鉴定则把实词置于停用词表中,因为此任务更感兴趣是风格而不是内容。

文献中有很多关于词权重的讨论。词权重可以看作是停用词表的延伸。现今的网络搜索引擎普遍使用现代的机器学习方法去学习最优权重。学习网页排名的算法可以利用许多特征。除了利用文档特征对作者写什么进行建模外,还可以利用基于用户浏览记录的特征,来对用户在读什么建模。用户浏览记录(尤其是点击记录)往往比分析文档本身信息量更大,因为网络中读者比作者多得多。搜索引擎可以通过帮助用户发现群体智能来提升价值。用户想知道哪些网页很热门(其他和你类似的用户在点击什么)。学习排名是一种实用的方法,采用了相对简单的机器学习和模式匹配技术来巧妙地应对可能需要完备人工智能理解(AIcomplete understanding) 的问题。

最近有博客这样讨论网页排名的机器学习:

“与其试图让计算机理解内容并判别文档是否有用,我们不如观察阅读文档的人,来看他们是否觉得文章有用。

人类在阅读网页,并找出哪些文章对自己有用这方面是很擅长的。计算机在这方面则不行。但是,人们没有时间去汇总他们觉得有用的所有网页,并与亿万人分享。而这对计算机来说轻而易举。我们应该让计算机和人各自发挥特长。人们在网络上搜寻智慧,而计算机把这些智慧突显出来。”

为什么当前技术忽略谓词

信息检索和情感分析的权重系统趋向于专注刚性指示词(rigid designators)14(例如名词),而忽略谓词(动词、形容词和副词)、强调词(例如“非常”)和贬义词15(例如“米老鼠(Mickey mouse)”16 和“ 破烂儿(rinky dink)”)。其原因可能与明斯基和帕佩特对感知机的反对有关。多年前,我们有机会接触MIMS 数据集,这是由AT & T 话务员收集的评论(建议与意见)文本。其中一些评论被标注者标记为正面、负面或中性。刚性指示词(通常是名词)往往与上述某一类标记(正面、负面或中性)紧密关联,但也有一些贬义词标记不是正面就是负面,很少中性。

贬义词怎么会标记为正面的呢?原来,当贬义词与竞争对手相关联的时候,标注者就把文档标为对我方“正面”;当贬义词与我方关联的时候,就标注为对我方“负面”。换句话说,这是一种异或依存关系(贬义词XOR 我方),超出了线性分离机的能力。

情感分析和信息检索目前的做法不考虑修饰成分(谓词与论元的关系,强调词和贬义词),因为除非你知道它们在修饰什么,否则很难理解修饰成分的意义。忽视贬义词和强调词似乎是个遗憾,尤其对情感分析,因为贬义词显然表达了强烈的意见。但对于一个特征,如果你不知道其正负,即使强度再大也没什么用。

当最终对谓词- 论元关系建模时,由于上述异或问题,我们需要重新审视对线性可分的假设。

【原文】

1.3.2 Minsky’s Objections

Minsky and Papert (1969) showed that perceptrons (and more generally, linear separators) cannot learn functions that are not linearly separable such as XOR and connectedness. In two dimensions, a scatter plot is linearly separable when a line can separate the points with positive labels from the points with negative labels. More generally, in n dimensions, points are linearly separable when there is a n−1 dimensional hyperplane that separates the positive labels from the negative labels.

Discrimination Tasks

The objection to perceptrons has implications for many popular machine learning methods including linear regression, logistic regression, SVMs and Naive Bayes. The objection also has implications for popular techniques in Information Retrieval such as the Vector Space Model and Probabilistic Retrieval, as well as the use of similar methods for other pattern matching tasks such as:

Word-Sense Disambiguation (WSD): distinguish “river” bank from “money” bank.

Author Identification: distinguish the Federalist Papers written by Madison from those written by Hamilton.

Information Retrieval (IR): distinguish documents that are relevant to a query from those that are not.

Sentiment Analysis: distinguish reviews that are positive from reviews that are negative.

Machine Learning methods such as Naive Bayes are often used to address these problems. Mosteller and Wallace (1964), for example, started with the Federalist Papers, a collection of 85 essays, written by Madison, Hamilton and Jay. The authorship has been fairly well established for the bulk of these essays, but there is some dispute over the authorhship for a dozen. The bulk of the essays are used as a training set to fit a model which is then applied to the disputed documents. At training time, Mosteller and Wallace estimated a likelihood ratio for each word in the vocabulary: Pr(word|Madison)/Pr(word|Hamilton). Then the disputed essays are scored by multiplying these ratios for each word in the disputed essays. The other tasks use pretty much the same mathematics, as illustrated in Table 2.

More recently, discriminative methods such as logistic regression have been displacing generative methods such as Naive Bayes. The objections to perceptrons apply to many variations of these methods including both discriminative and generative variants.

Stoplists, Term Weighting and Learning to Rank

Although the mathematics is similar across the four tasks in Table 2, there is an important difference in stop lists. Information Retrieval tends to be most interested in content words, and therefore, it is common practice to use a stop list to ignore function words such as “the.”

In contrast, Author Identification places content words on a stop list, because this task is more interested in style than content. The literature has quite a bit of discussion on term weighting. Term weighting can be viewed as a generalization of stop lists. In modern web search engines, it is common to use modern machine learning methods to learn optimal weights. Learning to rank methods can take advantage of many features. In addition to document features that model what the authors are writing, these methods can also take advantage of features based on user logs that model what the users are reading. User logs (and especially click logs) tend to be even more informative than documents because the web tends to have more readers than writers. Search engines can add value by helping users discover the wisdom of the crowd. Users want to know what’s hot (where other users like you are clicking). Learning to rank is a pragmatic approach that uses relatively simple machine learning and pattern matching techniques to finesse problems that might otherwise require AI-Complete Understanding.

Here is a discussion on learning to rank from a recent blog:16

Rather than trying to get computers to understand the content and whether it is useful, we watch people who read the content and look at whether they found it useful.

People are great at reading web pages and figuring out which ones are useful to them. Computers are bad at that. But, people do not have time to compile all the pages they found useful and share that information with billions of others. Computers are great at that. Let computers be computers and people be people. Crowds find the wisdom on the web. Computers surface that wisdom.

1.3.3 Why Current Technology Ignores Predicates

Weighting systems for Information Retrieval and Sentiment Analysis tend to focus on rigid designators (e.g., nouns) and ignore predicates (verbs, adjectives and adverbs) and intensifiers (e.g., “very”) and loaded terms (e.g., “Mickey Mouse” and “Rinky Dink”). The reason might be related to Minsky and Papert’s criticism of perceptrons. Years ago, we had access to MIMS, a collection of text comments collected by AT&T operators. Some of the comments were labeled by annotators as positive, negative or neutral. Rigid designators (typically nouns) tend to be strongly associated with one class or another, but there were quite a few loaded terms that were either positive or negative, but rarely neutral.

Current practice in Sentiment Analysis and Information Retrieval does not model modifiers (predicate-argument relationships, intensifiers and loaded terms), because it is hard to make sense of modifiers unless you know what they are modifying. Ignoring loaded terms and intensifiers seems like a missed opportunity, especially for Sentiment Analysis, since loaded terms are obviously expressing strong opinions. But you can’t do much with a feature if you don’t know the sign, even if you know the magnitude is large.

1.3.5 Those Who Ignore History Are Doomed To Repeat It

For the most part, the empirical revivals in Machine Learning, Information Retrieval and Speech Recognition have simply ignored PCM’s arguments, though in the case of neural nets, the addition of hidden layers to perceptrons could be viewed as a concession to Minsky and Papert. Despite such concessions, Minsky and Papert (1988) expressed disappointment with the lack of progress since Minsky and Papert (1969).

In preparing this edition we were tempted to “bring those theories up to date.” But when we found that little of significance had changed since 1969, when the book was first published, we concluded that it would be more useful to keep the original text. . . and add an epilogue. . . . One reason why progress has been so slow in this field is that researchers unfamiliar with its history have continued to make many of the same mistakes that others have made before them. Some readers may be shocked to hear it said that little of significance has happened in the field. Have not perceptron-like networks–under the new name connectionism-become a major subject of discussion. . . . Certainly, yes, in that there is a great deal of interest and discussion. Possibly yes, in the sense that discoveries have been made that may, in time, turn out to be of fundamental importance. But certainly no, in that there has been little clear-cut change in the conceptual basis of the field. The issues that give rise to excitement today seem much the same as those that were responsible for previous rounds of excitement. . . . Our position remains what it was when we wrote the book: We believe this realm of work to be immensely important and rich, but we expect its growth to require a degree of critical analysis that its more romantic advocates have always been reluctant to pursue–perhaps because the spirit of connectionism seems itself to go somewhat against the grain of analytic rigor.29

https://blog.sciencenet.cn/blog-362400-713016.html

上一篇:【Church - 钟摆摆得太远(5):现状与结论】

下一篇:作业:关于体温37C原因的解释

【NLP主流的反思:Church - 钟摆摆得太远(1)】

【NLP主流的反思:Church - 钟摆摆得太远(1)】